UltraLong-8B: Efficient Training of Ultra-Long Context Language Models

UltraLong-1M 🤗 UltraLong-2M 🤗 UltraLong-4M 🤗

We introduce UltraLong-8B, a series of ultra-long context language models designed to process extensive sequences of text (up to 1M, 2M, and 4M tokens) while maintaining competitive performance on standard benchmarks. Built on Llama-3.1, UltraLong-8B leverages a systematic training recipe that combines efficient continued pretraining with instruction tuning to enhance long-context understanding and instruction-following capabilities. This approach enables our models to efficiently scale their context windows without sacrificing general performance.

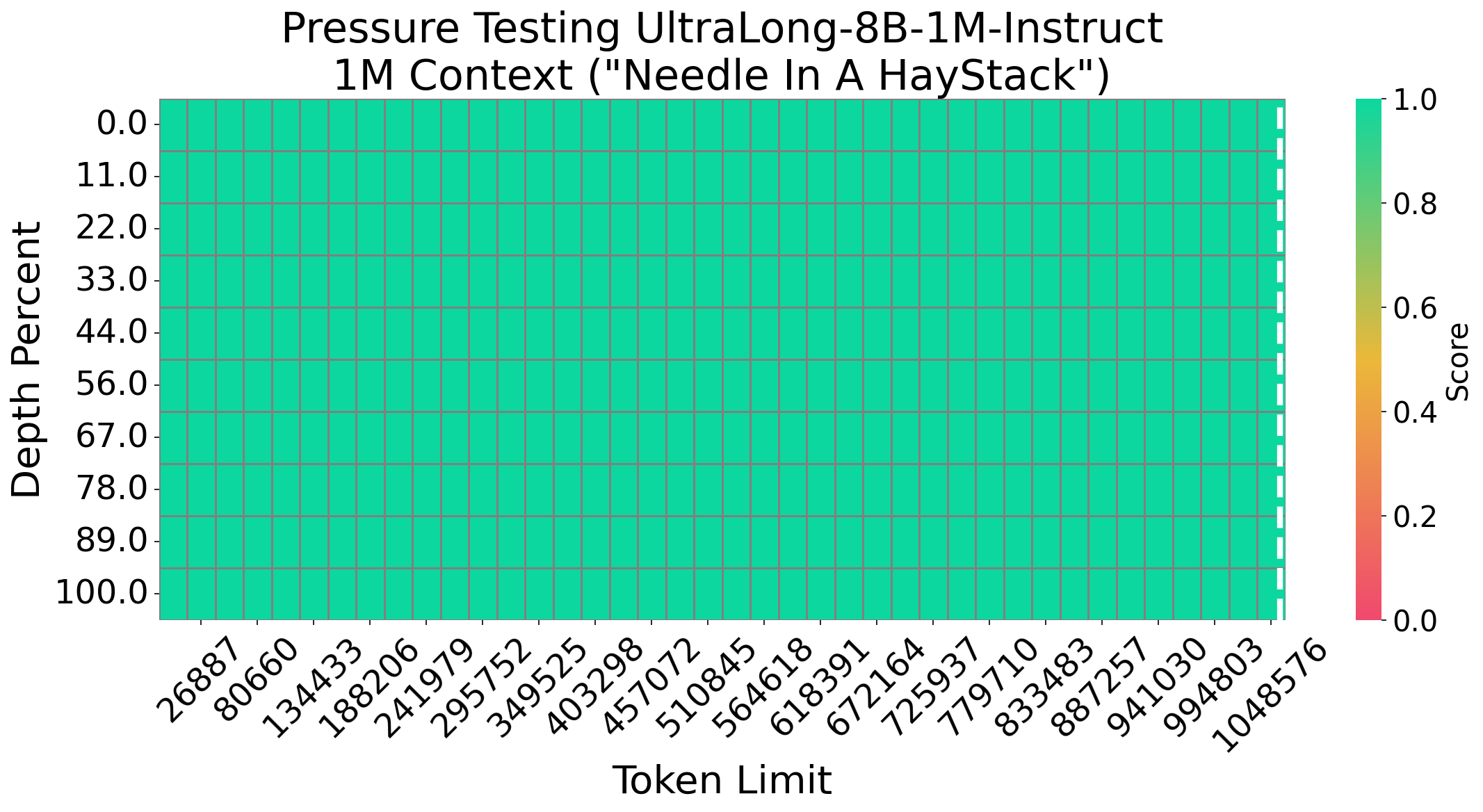

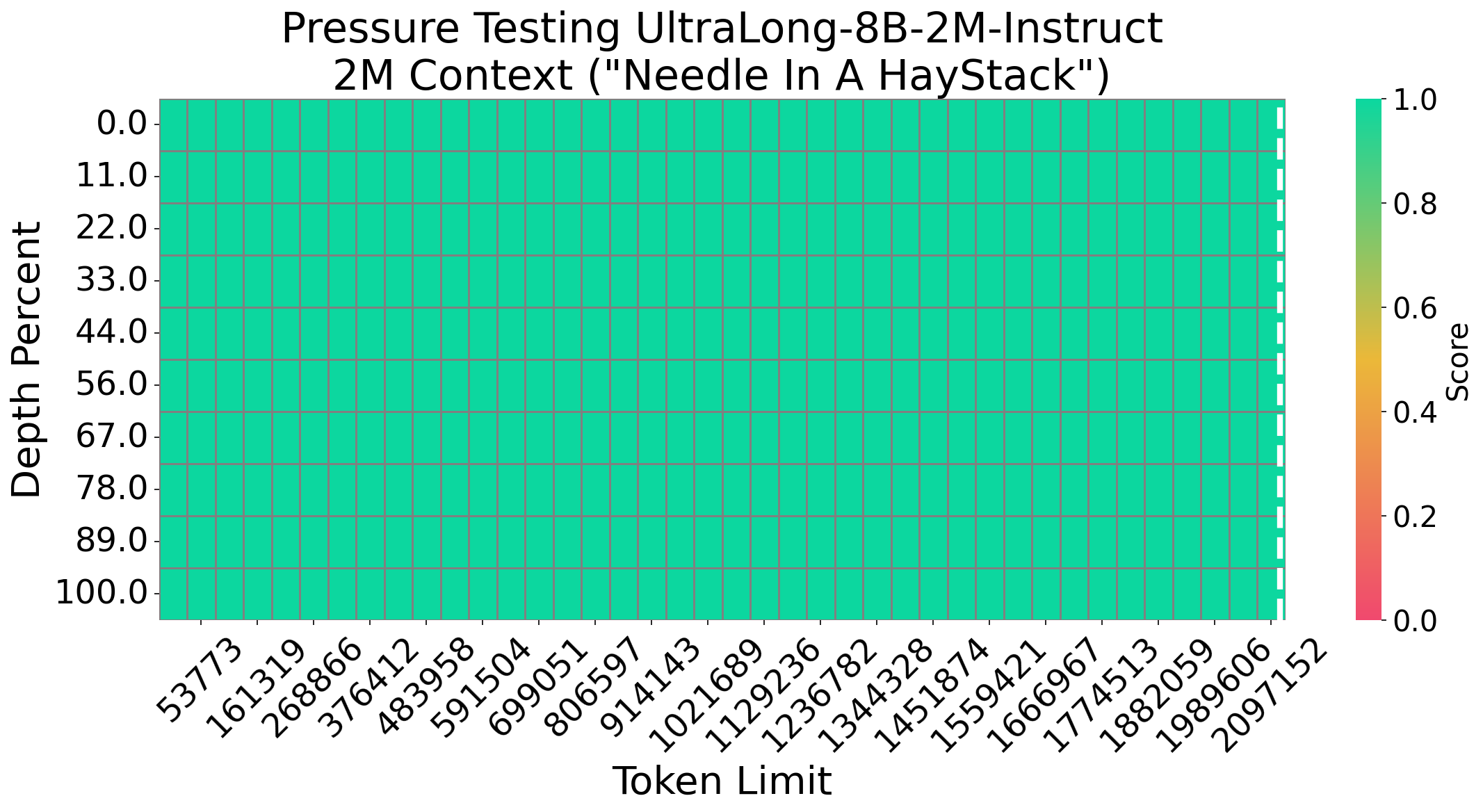

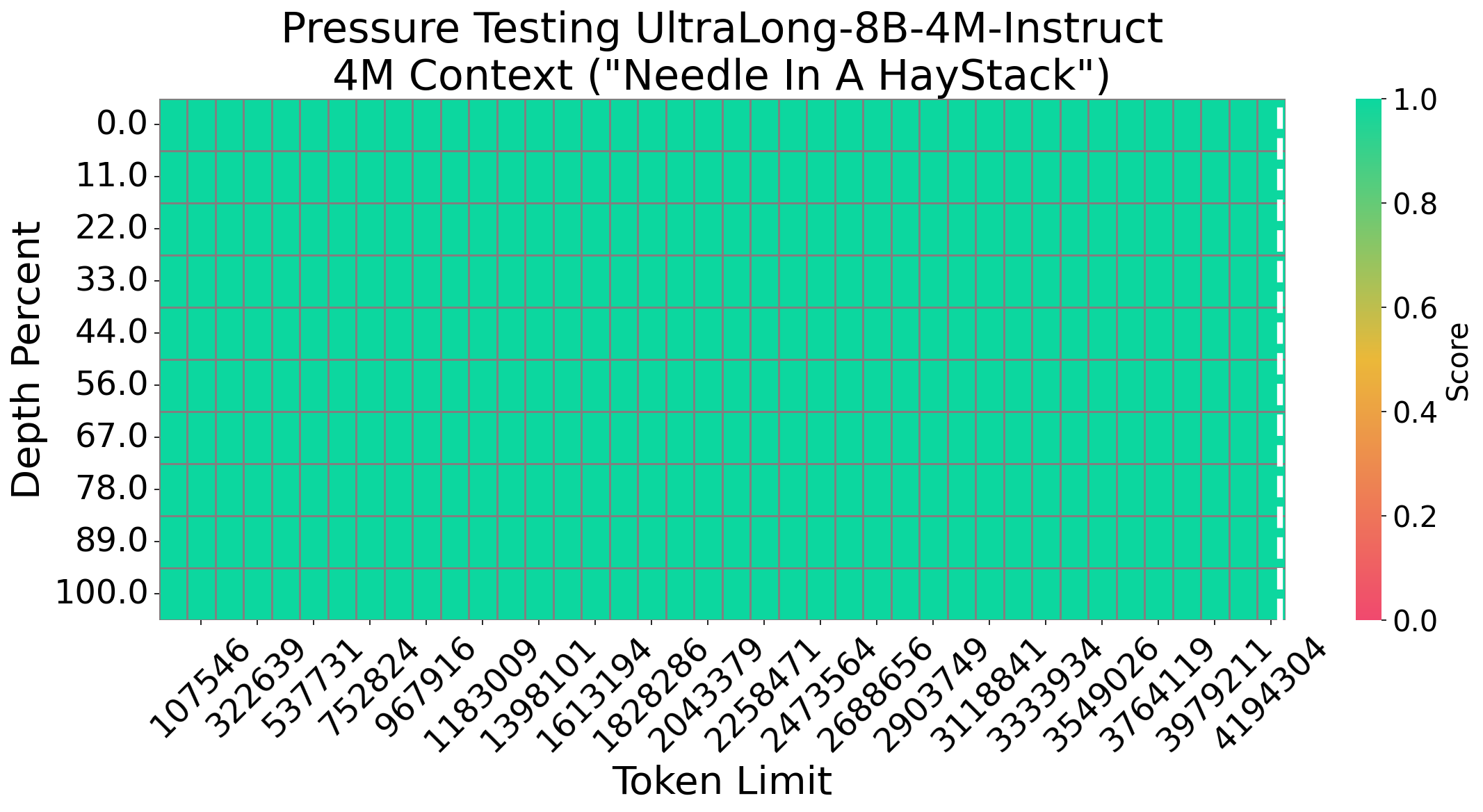

Needle In A Haystack

We evaluate UltraLong-8B using the Needle In A Haystack (NIAH) test—a popular benchmark for assessing long-context retrieval. Our model consistently achieves 100% accuracy across various sequence lengths and document depths, demonstrating its robust long-context retrieval capability.

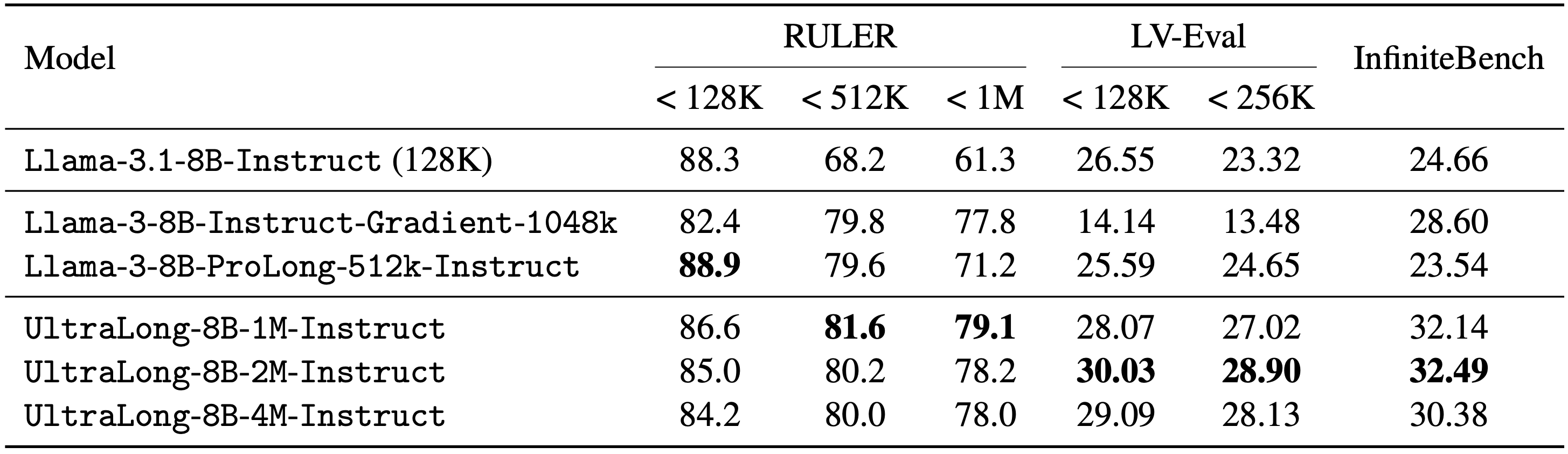

Long Context Evaluation

UltraLong-8B achieves superior results on real-world ultra-long tasks, outperforming existing models on benchmarks that require processing inputs beyond 128K tokens.

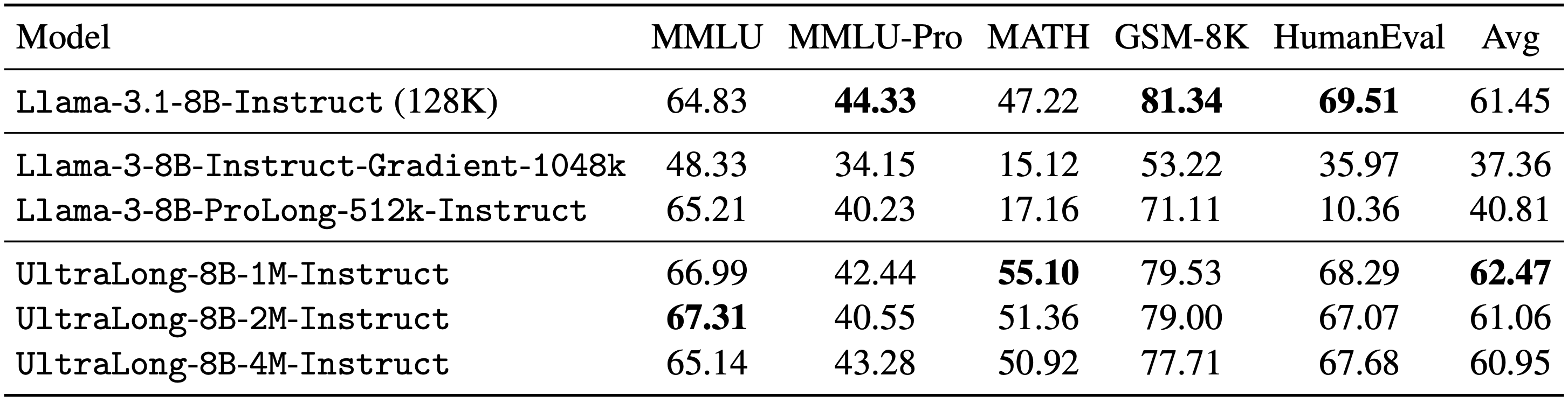

Standard Capability Evaluation

In addition to its long-context capabilities, UltraLong-8B maintains competitive performance on standard benchmarks, demonstrating balanced improvements for both long and short input tasks.

Uses

import transformers

import torch

model_id = "nvidia/Llama-3.1-8B-UltraLong-1M-Instruct"

pipeline = transformers.pipeline(

"text-generation",

model=model_id,

model_kwargs={"torch_dtype": torch.bfloat16},

device_map="auto",

)

messages = [

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

{"role": "user", "content": "Who are you?"},

]

outputs = pipeline(

messages,

max_new_tokens=256,

)

print(outputs[0]["generated_text"][-1])

Correspondence

Chejian Xu (chejian2@illinois.edu), Wei Ping (wping@nvidia.com)

Citation

@article{xu2025128k,

title={From 128K to 4M: Efficient Training of Ultra-Long Context Large Language Models},

author={Xu, Chejian and Ping, Wei and Xu, Peng and Liu, Zihan and Wang, Boxin and Shoeybi, Mohammad and Li, Bo and Catanzaro, Bryan},

journal={arXiv preprint arXiv:2504.06214},

year={2025}

}